It is convenient to have a geocoded dataset to work with when developing visualization tools. Although batch geocoding should be done in methods other than webservice calls, it is nice to have a command-line tool that we can call as needed. Below is a description of one such tool. Here is also included descriptions of various scripts utilized to prepare the dataset.

Let's play with a dataset from NYC Open Data. One of the dataset that is available is SAT Scores for NYC public schools. Let's download the CSV from here: https://nycopendata.socrata.com/Education/SAT-College-Board-2010-School-Level-Results/zt9s-n5aj

{% highlight bash %} wget https://nycopendata.socrata.com/api/views/zt9s-n5aj/rows.csv?accessType=DOWNLOAD -O raw_dataset.csv {% endhighlight %}

The first line of the file contains the column names. Let's use that information to create a database table in which we can save it.

{% highlight sql %}

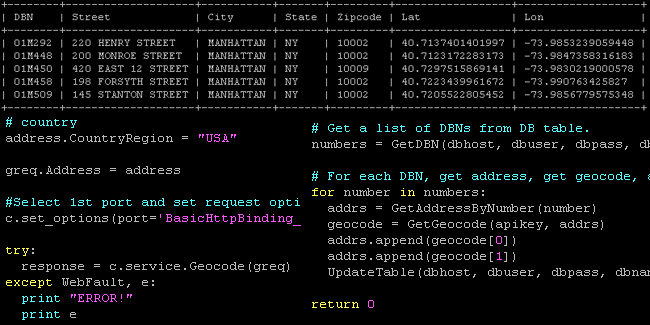

CREATE TABLE ds_NYC_SAT_2010 ( id int(11) AUTO_INCREMENT PRIMARY KEY, DBN varchar(255), SchoolName varchar(255), NumTestTakers varchar(255), CriticalReading varchar(255), Math varchar(255), Writing varchar(255), Street varchar(255), City varchar(32), State varchar(15), Zipcode varchar(10), Lat varchar(32), Lon varchar(32) );

{% endhighlight %}

In order to load the file into this table, we will need to remove the first line (the column names) and put the file in the /tmp directory so the mysql user can access it.

{% highlight bash %} sed 1d dataset.csv > /tmp/NYC_SAT_2010-1d.csv {% endhighlight %}

Let's load it into the table:

{% highlight sql %} LOAD DATA LOCAL INFILE '/tmp/NYC_SAT_2010.csv' INTO TABLE ds_NYC_SAT_2010 FIELDS TERMINATED BY ',' LINES TERMINATED BY '\n' (DBN,SchoolName,NumTestTakers,CriticalReading,Math,Writing); {% endhighlight %}

It just so happens that this dataset does not contain addresses for the schools. The addresses for the schools are available on school-specific pages like this one: http://schools.nyc.gov/SchoolPortals/01/M292/default.htm. However, the dataset contains what they call a DBN. That number happens to be contained in the URL. So what we can do is get the DBN from our newly created table and parse the contents of the URL. We will use the HTMLParser class in Python (http://docs.python.org/library/htmlparser.html).

{% highlight python %} #!/usr/bin/python

import sys import urllib2 from HTMLParser import HTMLParser

class MyHTMLParser(HTMLParser):

def init(self): HTMLParser.init(self) self._tag = "" self._name = "" self._value = ""

self.street = ""

self.city = ""

self.state = ""

self.zipcode = ""

def handle_starttag(self, tag, attrs): self._tag = tag

for name, value in attrs:

self._value = value

def handle_data(self, data): if self._tag == 'span': if 'lblSchoolStreet' in self._value: if self.street == "": self.street = data elif 'lblSchoolCity' in self._value: if self.city == "": self.city = data elif 'lblSchoolState' in self._value: if self.state == "": self.state = data elif 'lblSchoolZip' in self._value: if self.zipcode == "": self.zipcode = data

def GetAddressByNumber(DBN):

declare list to hold the address

addrList = list()

extract the first two characters

sid = DBN[:2]

extract the rest of the string

snum = DBN[len(sid):]

domain = "http://schools.nyc.gov/" url = domain + "SchoolPortals/{0}/{1}/default.htm".format(sid, snum)

content = urllib2.urlopen(url).read()

parser = MyHTMLParser() parser.feed(content)

addrList.append(parser.street) addrList.append(parser.city) addrList.append(parser.state) addrList.append(parser.zipcode)

parser.close()

return addrList {% endhighlight %}

Once we have the addresses, we can fetch the geocodes. There's a script at http://rbrundritt.wordpress.com/2010/01/23/accessing-bing-maps-web-services-with-perl-and-python/ that we can modify and use for that purpose. But we will need to install a few libraries before we can call the web service.

{% highlight bash %} sudo apt-get install python-setuptools wget https://fedorahosted.org/releases/s/u/suds/python-suds-0.3.7.tar.gz tar -xzf python-suds-0.3.7.tar.gz cd python-suds-0.3.7 sudo python setup.py install {% endhighlight %}

And here's the script that has been modified for our purpose:

{% highlight python %} #!/usr/bin/python

import sys import urllib2 from suds.client import Client from suds.client import WebFault

def GetGeocode(key, arrAddr): _geocode = list() application_key = key

domain = 'http://dev.virtualearth.net' virdir = '/webservices/v1/geocodeservice/' wsdl = 'geocodeservice.svc?wsdl' url = domain + virdir + wsdl

c = Client(url)

greq = c.factory.create('GeocodeRequest')

#Credentials cred = c.factory.create('ns0:Credentials') cred.ApplicationId = application_key greq.Credentials = cred

#Address address = c.factory.create('ns0:Address')

street

address.AddressLine = arrAddr[0]

city

address.Locality = arrAddr[1]

state

address.AdminDistrict = arrAddr[2]

country

address.CountryRegion = "USA"

greq.Address = address

#Select 1st port and set request options: c.set_options(port='BasicHttpBinding_IGeocodeService')

try: response = c.service.Geocode(greq) except WebFault, e: print "ERROR!" print e

locations = response['Results']['geocoderesult'][0]['locations']['GeocodeLocation']

for loc in locations: _geocode.append(loc['Latitude']) _geocode.append(loc['Longitude'])

return _geocode {% endhighlight %}

Of course, we will need to save all this data to our table. In order to use the Python MySQL libraries, we need to install them.

{% highlight bash %}

install python-dev (needed to build MySQL-Python)

sudo apt-get install python-dev

sudo apt-get install libmysqlclient-dev

download MySQL-python

wget http://downloads.sourceforge.net/project/mysql-python/mysql-python/1.2.3/MySQL-python-1.2.3.tar.gz?r=http%3A%2F%2Fsourceforge.net%2Fprojects%2Fmysql-python%2F&ts=1340730391&use_mirror=superb-sea2 -o MySQL-python-1.2.3.tar.gz tar xzf MySQL-python-1.2.3.tar.gz cd MySQL-python-1.2.3 sudo python setup.py build sudo python setup.py install {% endhighlight %}

And here's the code for retrieving and saving the data to our table:

{% highlight python %} #!/usr/bin/python

import sys import MySQLdb as mdb

def GetDBN(dbhost, dbuser, dbpass, dbname, limit): conn = None dbnlist = list()

try: conn = mdb.connect(dbhost, dbuser, dbpass, dbname) cursor = conn.cursor()

cursor.execute("""

SELECT DBN

FROM ds_NYC_SAT_2010

LIMIT %s

""", (limit)

)

rows = cursor.fetchall()

for row in rows:

dbnlist.append(row[0])

cursor.close()

conn.commit()

return dbnlist

except mdb.Error, e: print "Error %d: %s" % (e.args[0], e.args[1]) sys.exit(1)

finally: if conn: conn.close()

def UpdateTable(dbhost, dbuser, dbpass, dbname, arrAddr, DBN):

conn = None

try: conn = mdb.connect(dbhost, dbuser, dbpass, dbname) cursor = conn.cursor()

cursor.execute("""

UPDATE ds_NYC_SAT_2010

SET

Street=%s,

City=%s,

State=%s,

Zipcode=%s,

Lat=%s,

Lon=%s

WHERE DBN=%s

""",

(

arrAddr[0],

arrAddr[1],

arrAddr[2],

arrAddr[3],

arrAddr[4],

arrAddr[5],

DBN

)

)

cursor.close()

conn.commit()

except mdb.Error, e: print "Error %d: %s" % (e.args[0], e.args[1]) sys.exit(1)

finally: if conn: conn.close() {% endhighlight %}

Now that we have all our tasks in place, we can call them from a driver:

{% highlight python %} #!/usr/bin/python

import sys from Database import GetDBN from Database import UpdateTable from AddressParser import GetAddressByNumber from Geocode import GetGeocode

def main(argv=None): """Main function to drive the process. """ dbhost = '_server' dbuser = '_user' dbpass = '_pass' dbname = "_dbname" apikey = "AAAAAABBBBBBBBBBBCCCCCCCCCCCCCCCCDDDDDDDDDDDDDDDDEEEEEEEEEEEEFFF"

Get a list of DBNs from DB table.

numbers = GetDBN(dbhost, dbuser, dbpass, dbname, 5)

For each DBN, get address, get geocode, and update table.

for number in numbers: addrs = GetAddressByNumber(number) geocode = GetGeocode(apikey, addrs) addrs.append(geocode[0]) addrs.append(geocode[1]) UpdateTable(dbhost, dbuser, dbpass, dbname, addrs, number)

return 0

if name == "main": sys.exit(main()) {% endhighlight %}

That's it! Now we can go ahead and build the cool stuff…the tools that can make this data useful!